A few words first

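I skipped writing notes for just one day and I'm already out of practice; I barely know where to start. Honestly, I'm still not fluent in Markdown and need to get more familiar with it. With large language models around, picking up basics like this is quick: you just ask and get an example. What I really want is to walk through the whole process step by step, understand it properly, and turn it into notes. That makes it stick, and if I forget something I can come back and read what I wrote.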

Streaming replies with the openai library

Code

```python
# Import the required libraries
# Note: openai.ChatCompletion and openai.api_base belong to the pre-1.0 SDK
# (pip install "openai<1.0"); both were removed in openai 1.0.
import openai  # for talking to the OpenAI API

# Set your API key
openai.api_key = ''  # replace with your actual API key

# Change the API base URL
openai.api_base = ''  # custom API base URL


# Stream a conversation with GPT
def chat_with_gpt_stream(messages):
    try:
        # Create a streaming response
        stream = openai.ChatCompletion.create(
            model="gpt-4o-mini",  # model to use
            messages=messages,    # conversation history
            max_tokens=1000,      # maximum number of tokens (not characters) in the reply
            temperature=0.7,      # how creative the answer is
            stream=True,          # enable streaming output
        )

        # Accumulates the complete response
        full_response = ""

        # Process the stream chunk by chunk
        for chunk in stream:
            # print("chunk", chunk, end="")

            # Make sure the chunk contains at least one choice
            if 'choices' in chunk and len(chunk['choices']) > 0:
                # Take the 'delta' dict of the first choice (empty if absent),
                # then its 'content' field (empty string if absent)
                delta = chunk['choices'][0].get('delta', {})
                content = delta.get('content', '')
                if content:
                    # Append the piece to the full response
                    full_response += content
                    # Print in real time:
                    # end='': no newline, so the output stays continuous
                    # flush=True: flush the output buffer so the text appears immediately
                    print(content, end='', flush=True)

        print()  # newline to finish the streamed output
        return full_response  # return the complete response

    except Exception as e:
        # On error, print the message
        print(f"Error: {str(e)}")
        return None  # None signals failure


# Main program
if __name__ == "__main__":
    print("Chatting with GPT-4o mini (streaming mode), type 'exit' to quit")

    # Initialise the conversation history
    messages = [{"role": "system", "content": "You are a helpful assistant."}]

    # Conversation loop
    while True:
        # Read user input
        user_input = input("\nYou: ")

        # Quit on 'exit'
        if user_input.lower() == "exit":
            break

        # Add the user's message to the history
        messages.append({"role": "user", "content": user_input})

        # Print the GPT prefix before the streamed reply arrives
        print("\nGPT: ", end='', flush=True)

        # Get GPT's streamed reply
        response = chat_with_gpt_stream(messages)

        if response:
            # On success, add GPT's reply to the history
            messages.append({"role": "assistant", "content": response})
        else:
            # Otherwise report the failure
            print("Could not get a reply from GPT, please check your network connection or API key.")
```

Result

Understanding

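As you can see, the call to ChatCompletion.create now passes one extra argument, stream=True, which tells the API to stream its output. Previously we read the result straight from response. Now we loop over stream instead. That's what programming against an API is like: add a parameter, add a for loop, and you're done. After that you still need to validate the returned data, because the streamed data structure is a bit different.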
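For each chunk, the loop does three things: check that choices is non-empty, read choices[0].delta.content if it is there, then append the piece and print it. Below is a minimal sketch of just that logic, pulled out into a network-free function so it can be tried on hand-written chunks. The function name collect_stream and the sample chunks are illustrative assumptions. With the pre-1.0 openai SDK, the stream object should work directly as the chunks argument, because its chunk objects are dict subclasses. That is worth double-checking against your installed version.

```python
from typing import Callable, Iterable, Optional


def collect_stream(chunks: Iterable[dict],
                   on_piece: Optional[Callable[[str], None]] = None) -> str:
    """Join the delta contents of streamed chat chunks into one string.

    chunks: any iterable of dicts shaped like chat.completion.chunk objects
    on_piece: optional callback for every non-empty piece (e.g. printing it)
    """
    parts = []
    for chunk in chunks:
        choices = chunk.get("choices") or []
        if not choices:
            continue  # skip chunks that carry no choices
        # Missing delta or content (or content=None) counts as empty
        content = (choices[0].get("delta") or {}).get("content") or ""
        if content:
            parts.append(content)
            if on_piece is not None:
                on_piece(content)
    return "".join(parts)


# Hypothetical hand-written chunks, shaped like the real ones
sample = [
    {"choices": [{"index": 0, "delta": {"role": "assistant", "content": ""}, "finish_reason": None}]},
    {"choices": [{"index": 0, "delta": {"content": "你好"}, "finish_reason": None}]},
    {"choices": [{"index": 0, "delta": {"content": "！"}, "finish_reason": None}]},
    {"choices": [{"index": 0, "delta": {}, "finish_reason": "stop"}]},
]

text = collect_stream(sample, on_piece=lambda s: print(s, end="", flush=True))
print()
```

Passing the printing in as a callback keeps the accumulation testable on its own. The print(content, end='', flush=True) behaviour from the notes simply becomes the on_piece argument.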

Streaming response

```python
# Create a streaming response
stream = openai.ChatCompletion.create(
    model="gpt-4o-mini",  # model to use
    messages=messages,    # conversation history
    max_tokens=1000,      # maximum number of tokens (not characters) in the reply
    temperature=0.7,      # how creative the answer is
    stream=True,          # enable streaming output
)

# Accumulates the complete response
full_response = ""

# Process the stream chunk by chunk
for chunk in stream:
    if 'choices' in chunk and len(chunk['choices']) > 0:
        delta = chunk['choices'][0].get('delta', {})
        content = delta.get('content', '')
        if content:
            full_response += content
            print(content, end='', flush=True)
```

Non-streaming response

```python
response = openai.ChatCompletion.create(
    model="gpt-4o-mini",
    messages=messages,  # pass the whole conversation history
    max_tokens=1000,    # maximum number of tokens (not characters) in the reply
    temperature=0.7,    # how creative the answer is
)

print("messages===>:", messages)
print("response===>:", response)

return response['choices'][0]['message']['content']
```

Data structure comparison

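Side by side, the two structures are mostly the same. The differences are inside choices: message becomes delta, and the streamed chunks carry no usage statistics. The object field also differs (chat.completion.chunk versus chat.completion). In streaming mode every chunk has a finish_reason. It stays null until the last chunk, where it is stop, which means the reply is finished. To be honest, I wasn't reading very carefully at first. I then compared them several times and removed my comments, and these are all the differences I found.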
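Since the differences come down to delta versus message plus the missing usage block, the streamed chunks can be folded back into a response shaped like the non-streaming one. Below is a sketch under that assumption. merge_chunks and make_chunk are hypothetical helpers, and the sample chunks are made up to mirror the structure shown below. usage is simply left out, because these chunks don't carry it.

```python
from typing import Iterable


def merge_chunks(chunks: Iterable[dict]) -> dict:
    """Fold chat.completion.chunk dicts into one chat.completion-shaped dict
    (without "usage", which the streamed chunks do not contain)."""
    merged = None
    for chunk in chunks:
        if merged is None:
            # Copy the top-level metadata from the first chunk
            merged = {"id": chunk.get("id"), "object": "chat.completion",
                      "created": chunk.get("created"), "model": chunk.get("model"),
                      "choices": []}
        for choice in chunk.get("choices", []):
            idx = choice.get("index", 0)
            # Grow the choices list until this index exists
            while len(merged["choices"]) <= idx:
                merged["choices"].append({"index": len(merged["choices"]),
                                          "message": {"role": None, "content": ""},
                                          "finish_reason": None})
            target = merged["choices"][idx]
            delta = choice.get("delta") or {}
            if delta.get("role"):
                target["message"]["role"] = delta["role"]  # delta -> message
            if delta.get("content"):
                target["message"]["content"] += delta["content"]
            if choice.get("finish_reason") is not None:
                target["finish_reason"] = choice["finish_reason"]
    return merged if merged is not None else {}


def make_chunk(delta, finish_reason=None):
    # Builds one hypothetical chunk; only delta and finish_reason vary
    return {"id": "chatcmpl-demo", "object": "chat.completion.chunk",
            "model": "gpt-4o-mini", "created": 1729091177,
            "choices": [{"index": 0, "delta": delta, "finish_reason": finish_reason}]}


merged = merge_chunks([
    make_chunk({"role": "assistant", "content": ""}),
    make_chunk({"content": "你好"}),
    make_chunk({"content": "！"}),
    make_chunk({}, "stop"),
])
print(merged["choices"][0]["message"])
```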

Streaming data structure

```
{
  "id": "chatcmpl-89CBDwRT8tl6nN8dTAj8GV5cMoD2z",
  "object": "chat.completion.chunk",
  "model": "gpt-4o-mini",
  "created": 1729091177,
  "choices": [
    {
      "index": 0,
      "delta": {
        "role": "assistant",
        "content": ""
      },
      "finish_reason": null
    }
  ]
}
{
  "id": "chatcmpl-89CBDwRT8tl6nN8dTAj8GV5cMoD2z",
  "object": "chat.completion.chunk",
  "model": "gpt-4o-mini",
  "created": 1729091177,
  "choices": [
    {
      "index": 0,
      "delta": {
        "content": "\u4f60\u597d"
      },
      "finish_reason": null
    }
  ]
}
// console output: 你好
{
  "id": "chatcmpl-89CBDwRT8tl6nN8dTAj8GV5cMoD2z",
  "object": "chat.completion.chunk",
  "model": "gpt-4o-mini",
  "created": 1729091177,
  "choices": [
    {
      "index": 0,
      "delta": {
        "content": "\uff01"
      },
      "finish_reason": null
    }
  ]
}
// I didn't paste all of the JSON, only part of it. This is the end: finish_reason is "stop", which means the stream has finished.
{
  "id": "chatcmpl-89CBDwRT8tl6nN8dTAj8GV5cMoD2z",
  "object": "chat.completion.chunk",
  "model": "gpt-4o-mini",
  "created": 1729091177,
  "choices": [
    {
      "index": 0,
      "delta": {},
      "finish_reason": "stop"
    }
  ]
}
```

Non-streaming data structure

```json
{
  "id": "chatcmpl-89D1bsACi3mm1eQofzau41ubWFyFZ",
  "object": "chat.completion",
  "created": 1728824619,
  "model": "gpt-4o-mini",
  "choices": [
    {
      "index": 0,
      "message": {
        "role": "assistant",
        "content": "你好!有什么我可以帮助你的吗?"
      },
      "finish_reason": "stop"
    }
  ],
  "usage": {
    "prompt_tokens": 16,
    "completion_tokens": 9,
    "total_tokens": 25
  }
}
```

Streaming replies by calling the API directly

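Here I send the request directly with the basic requests library. I won't paste a comparison this time, since it's basically the same as above. What I care about more is how this kind of streaming is done in Java. The data structure here also looked a bit different at first, so I'm pasting it below to take a look.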

Code

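```python
import json

import requests

# API key and base URL
API_KEY = ''   # replace with your actual API key
API_BASE = ''  # base URL of the API


def chat_with_gpt_stream(messages, max_tokens=1000, temperature=0.7):
    # HTTP request headers
    headers = {
        'Content-Type': 'application/json',
        'Authorization': f'Bearer {API_KEY}'
    }

    # Request payload
    data = {
        'model': 'gpt-4o-mini',
        'messages': messages,
        'stream': True,  # ask the API to stream its output
        'max_tokens': max_tokens,
        'temperature': temperature
    }

    try:
        # POST to the API; requests' own stream=True keeps the body from being
        # downloaded all at once, so lines can be read as they arrive
        response = requests.post(f'{API_BASE}/chat/completions',
                                 headers=headers,
                                 json=data,
                                 stream=True)

        # Raise an exception for 4xx/5xx status codes
        response.raise_for_status()

        full_response = ""  # accumulates the complete response

        # Process the streamed response line by line
        for line in response.iter_lines():
            if not line:  # skip blank separator lines
                continue
            # print(line)
            # Decode the bytes as UTF-8
            line = line.decode('utf-8')

            # Only SSE data lines carry a payload
            if not line.startswith('data: '):
                continue
            payload = line[6:]  # strip the 'data: ' prefix
            # The stream may end with a literal [DONE] instead of JSON
            if payload.strip() == '[DONE]':
                break

            chunk = json.loads(payload)
            choices = chunk.get('choices') or []
            if not choices:
                continue  # nothing to extract from this chunk

            # Extract the text content (before checking for the end,
            # so a final chunk that also carries content is not lost)
            content = choices[0].get('delta', {}).get('content') or ''
            if content:
                full_response += content  # append to the full response
                # Print in real time:
                # end='': no newline, so the output stays continuous
                # flush=True: flush the output buffer so the text appears immediately
                print(content, end='', flush=True)

            # A non-null finish_reason marks the end of the stream
            if choices[0].get('finish_reason') is not None:
                break

        print()  # newline to finish the streamed output
        return full_response  # return the complete response

    except (requests.exceptions.RequestException, json.JSONDecodeError) as e:
        print(f"Error: {str(e)}")  # request or parsing error
        return None  # None signals failure


if __name__ == "__main__":
    print("Chatting with GPT-4o mini (streaming mode), type 'exit' to quit")

    # Initialise the conversation history
    messages = [{"role": "system", "content": "You are a helpful assistant."}]

    # Conversation loop
    while True:
        user_input = input("\nYou: ")
        if user_input.lower() == "exit":
            break

        # Add the user's message to the history
        messages.append({"role": "user", "content": user_input})
        print("\nGPT: ", end='', flush=True)

        # Get GPT's streamed reply
        response = chat_with_gpt_stream(messages)

        if response:
            # Add GPT's reply to the history
            messages.append({"role": "assistant", "content": response})
        else:
            print("Could not get a reply from GPT, please check your network connection or API key.")
```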

Data structure

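Oh, the data structure is the same after all. The only difference is in the handling. Each line starts with the 6-character prefix "data: ", so the JSON is parsed from line[6:] onward. When finish_reason is non-null, the loop breaks. In other words, finish_reason only gets a value (stop) in the final chunk, and until then the loop keeps going. I feel another check inside that branch would make it more robust, though it works fine without one for the responses shown here.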
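Here is one way the extra checks could look. This is a sketch that assumes the server may send a few things the loop should tolerate: blank keep-alive lines, lines that don't start with "data:", a closing data: [DONE] line (the Java versions later in these notes also check for this), or a chunk with an empty choices list. It also yields a chunk's content before honouring finish_reason, in case a server ever puts both in the same chunk. iter_sse_contents and the sample lines are hypothetical.

```python
import json
from typing import Iterable, Iterator


def iter_sse_contents(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield delta contents from raw SSE lines (e.g. requests' iter_lines())."""
    for raw in lines:
        if not raw:
            continue  # blank line between events
        line = raw.decode("utf-8")
        if not line.startswith("data:"):
            continue  # comments / keep-alives / other SSE fields
        payload = line[len("data:"):].strip()  # works with or without the space
        if payload == "[DONE]":
            return  # explicit end-of-stream marker
        chunk = json.loads(payload)
        choices = chunk.get("choices") or []
        if not choices:
            continue  # chunk without choices
        content = (choices[0].get("delta") or {}).get("content")
        if content:
            yield content
        if choices[0].get("finish_reason") is not None:
            return  # last chunk reached


# Hypothetical raw lines in the same shape as the log below
sample_lines = [
    b'data: {"choices":[{"index":0,"delta":{"role":"assistant","content":""},"finish_reason":null}]}',
    b'',
    b'data: {"choices":[{"index":0,"delta":{"content":"\xe4\xbd\xa0\xe5\xa5\xbd"},"finish_reason":null}]}',
    b'data: {"choices":[]}',
    b'data: {"choices":[{"index":0,"delta":{"content":"\xef\xbc\x81"},"finish_reason":null}]}',
    b'data: {"choices":[{"index":0,"delta":{},"finish_reason":"stop"}]}',
    b'data: [DONE]',
]
print("".join(iter_sse_contents(sample_lines)))
```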

```text
GPT: b'data: {"id":"chatcmpl-89CLSXbsLl9CwyKbqy8EvJDRHpDbX","object":"chat.completion.chunk","model":"gpt-4o-mini","created":1729091988,"choices":[{"index":0,"delta":{"role":"assistant","content":""},"finish_reason":null}]}'
b'data: {"id":"chatcmpl-89CLSXbsLl9CwyKbqy8EvJDRHpDbX","object":"chat.completion.chunk","model":"gpt-4o-mini","created":1729091988,"choices":[{"index":0,"delta":{"content":"\xe4\xbd\xa0\xe5\xa5\xbd"},"finish_reason":null}]}'
你好b'data: {"id":"chatcmpl-89CLSXbsLl9CwyKbqy8EvJDRHpDbX","object":"chat.completion.chunk","model":"gpt-4o-mini","created":1729091988,"choices":[{"index":0,"delta":{"content":"\xef\xbc\x81"},"finish_reason":null}]}'
！b'data: {"id":"chatcmpl-89CLSXbsLl9CwyKbqy8EvJDRHpDbX","object":"chat.completion.chunk","model":"gpt-4o-mini","created":1729091988,"choices":[{"index":0,"delta":{"content":"\xe6\x9c\x89\xe4\xbb\x80\xe4\xb9\x88"},"finish_reason":null}]}'
有什么b'data: {"id":"chatcmpl-89CLSXbsLl9CwyKbqy8EvJDRHpDbX","object":"chat.completion.chunk","model":"gpt-4o-mini","created":1729091988,"choices":[{"index":0,"delta":{"content":"\xe6\x88\x91"},"finish_reason":null}]}'
我b'data: {"id":"chatcmpl-89CLSXbsLl9CwyKbqy8EvJDRHpDbX","object":"chat.completion.chunk","model":"gpt-4o-mini","created":1729091988,"choices":[{"index":0,"delta":{"content":"\xe5\x8f\xaf\xe4\xbb\xa5"},"finish_reason":null}]}'
可以b'data: {"id":"chatcmpl-89CLSXbsLl9CwyKbqy8EvJDRHpDbX","object":"chat.completion.chunk","model":"gpt-4o-mini","created":1729091988,"choices":[{"index":0,"delta":{"content":"\xe5\xb8\xae\xe5\x8a\xa9"},"finish_reason":null}]}'
帮助b'data: {"id":"chatcmpl-89CLSXbsLl9CwyKbqy8EvJDRHpDbX","object":"chat.completion.chunk","model":"gpt-4o-mini","created":1729091988,"choices":[{"index":0,"delta":{"content":"\xe4\xbd\xa0"},"finish_reason":null}]}'
你b'data: {"id":"chatcmpl-89CLSXbsLl9CwyKbqy8EvJDRHpDbX","object":"chat.completion.chunk","model":"gpt-4o-mini","created":1729091988,"choices":[{"index":0,"delta":{"content":"\xe7\x9a\x84\xe5\x90\x97"},"finish_reason":null}]}'
的吗b'data: {"id":"chatcmpl-89CLSXbsLl9CwyKbqy8EvJDRHpDbX","object":"chat.completion.chunk","model":"gpt-4o-mini","created":1729091988,"choices":[{"index":0,"delta":{"content":"\xef\xbc\x9f"},"finish_reason":null}]}'
？b'data: {"id":"chatcmpl-89CLSXbsLl9CwyKbqy8EvJDRHpDbX","object":"chat.completion.chunk","model":"gpt-4o-mini","created":1729091988,"choices":[{"index":0,"delta":{},"finish_reason":"stop"}]}'
```

Streaming chat in Java by calling the API directly

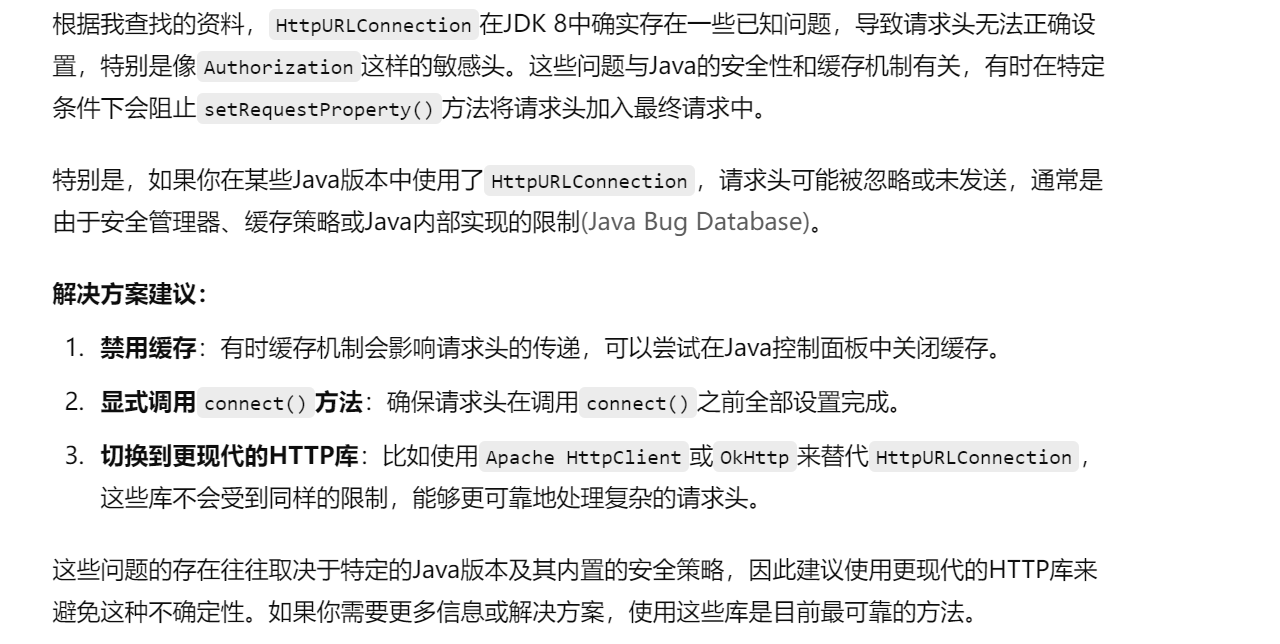

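I started out sending the request with HttpURLConnection and ran into a problem: headers set with setRequestProperty didn't seem to take effect, and Authorization in particular just would not work no matter what I tried. I asked GPT, and according to its answer this has to do with Java's security and caching mechanisms. I've pasted GPT's reply as a screenshot for the details.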

JDK 11+

So I wrote it with JDK 17 instead. HttpClient, introduced in JDK 11, is really much nicer to use...

```java
import com.fasterxml.jackson.databind.JsonNode;
import com.fasterxml.jackson.databind.ObjectMapper;
import com.openaidemo.model.ChatRequest;
import com.openaidemo.model.Message;

import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;
import java.util.ArrayList;
import java.util.List;
import java.util.Scanner;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class OpenAIDemoSteamAPI {

    // API key, replace with your actual key
    private static final String API_KEY = "";

    // Base URL of the API
    private static final String API_BASE = "";

    // ObjectMapper for JSON handling
    private static final ObjectMapper objectMapper = new ObjectMapper();

    // HttpClient with a 120-second connect timeout
    private static final HttpClient httpClient = HttpClient.newBuilder()
            .connectTimeout(Duration.ofSeconds(120))
            .build();

    // Chat with GPT, streaming or not depending on isStream
    public static String chatWithGPT(List<Message> messages, String model, int maxTokens, double temperature, boolean isStream) {
        // Accumulates the complete response
        StringBuilder fullResponse = new StringBuilder();
        try {
            // Build the request body
            String requestBody = createRequestBody(messages, model, maxTokens, temperature, isStream);

            // Build the HTTP request
            HttpRequest request = HttpRequest.newBuilder()
                    .uri(URI.create(API_BASE + "/chat/completions"))
                    .header("Content-Type", "application/json")
                    .header("Authorization", "Bearer " + API_KEY)
                    .POST(HttpRequest.BodyPublishers.ofString(requestBody))
                    .build();

            // Print the request details (for debugging)
            // System.out.println("Request URL: " + request.uri());
            // System.out.println("Request headers:");
            // request.headers().map().forEach((key, values) -> System.out.println(key + ": " + values));
            // System.out.println("Request body: " + requestBody);

            if (isStream) {
                // Send the request and read the body as a stream of lines
                HttpResponse<Stream<String>> response = httpClient.send(request, HttpResponse.BodyHandlers.ofLines());

                // Check the status code
                int statusCode = response.statusCode();
                if (statusCode != 200) {
                    // Not 200: print the error and return
                    System.out.println("HTTP error: " + statusCode);
                    System.out.println("Response: " + response.body().collect(Collectors.joining("\n")));
                    return null;
                }

                // Handle the successful response
                response.body()
                        .forEach(line -> {
                            // Print the raw line (for debugging)
                            // System.out.println("Raw line: " + line);

                            // Only SSE data lines carry a payload
                            if (line.startsWith("data: ")) {
                                String jsonLine = line.substring(6).trim();

                                // End-of-stream marker; inside forEach, "return" just skips this line
                                if ("[DONE]".equals(jsonLine)) {
                                    // System.out.println("\nStream finished");
                                    return;
                                }

                                try {
                                    // Parse the JSON
                                    JsonNode jsonNode = objectMapper.readTree(jsonLine);
                                    // Extract the content field; a missing or null node counts as empty
                                    JsonNode contentNode = jsonNode.at("/choices/0/delta/content");
                                    String content = contentNode.isTextual() ? contentNode.asText() : "";
                                    if (!content.isEmpty()) {
                                        // Append to the full response
                                        fullResponse.append(content);
                                        // Print in real time
                                        System.out.print(content);
                                        System.out.flush();
                                    }

                                    // Check whether the stream has ended
                                    // if ("stop".equals(jsonNode.at("/choices/0/finish_reason").asText())) {
                                    //     System.out.println("\n");
                                    // }
                                } catch (Exception e) {
                                    // Catch and print JSON parsing errors
                                    System.out.println("\nError while parsing the response: " + e.getMessage());
                                }
                            }
                        });
            } else {
                // Non-streaming
                HttpResponse<String> response = httpClient.send(request, HttpResponse.BodyHandlers.ofString());
                if (response.statusCode() != 200) {
                    System.out.println("HTTP error: " + response.statusCode());
                    System.out.println("Response: " + response.body());
                    return null;
                }
                // Parse the JSON
                JsonNode jsonNode = objectMapper.readTree(response.body());
                // Extract the content field
                String content = jsonNode.at("/choices/0/message/content").asText();
                if (!content.isEmpty()) {
                    fullResponse.append(content);
                    System.out.print(content);
                }
            }
        } catch (Exception e) {
            // IO, interruption or JSON errors: print the message
            System.out.println("Error: " + e.getMessage());
            return null;
        }

        // Return the complete response string
        return fullResponse.toString();
    }

    // Build the JSON request body
    private static String createRequestBody(List<Message> messages, String model, int maxTokens, double temperature, boolean isStream) {
        try {
            // A record (JDK 16+ feature) could replace the ChatRequest class:
            // record ChatRequest(String model, List<Message> messages, boolean stream, int max_tokens,
            //                    double temperature) {
            // }
            ChatRequest request = new ChatRequest(model, messages, isStream, maxTokens, temperature);
            // Serialize the request object to a JSON string with ObjectMapper
            return objectMapper.writeValueAsString(request);
        } catch (Exception e) {
            throw new RuntimeException("Failed to create the request body", e);
        }
    }

    // Main method
    public static void main(String[] args) {
        System.out.print("Chatting with GPT (streaming mode), type 'exit' to quit");
        // Initialise the message list
        List<Message> messages = new ArrayList<>();
        // Add the system message
        messages.add(new Message("system", "You are a helpful assistant."));
        // try-with-resources closes the Scanner automatically
        try (Scanner scanner = new Scanner(System.in)) {
            // Conversation loop
            while (true) {
                // Prompt for input
                System.out.print("\nYou: ");
                // Read the user's input
                String userInput = scanner.nextLine();
                // Quit on "exit"
                if ("exit".equalsIgnoreCase(userInput)) {
                    break;
                }
                // Add the user's message to the list
                messages.add(new Message("user", userInput));
                // Print the GPT prefix
                System.out.print("GPT: ");
                // true = streaming, false = wait for the whole reply
                boolean userStream = true;
                // Call chatWithGPT to get GPT's reply
                String response = chatWithGPT(messages, "gpt-4o-mini", 3000, 0.7, userStream);
                if (null != response && !response.isEmpty()) {
                    messages.add(new Message("assistant", response));
                } else {
                    // Empty reply: print an error
                    System.out.println("Could not get a reply from GPT, please check your network connection or API key.");
                }
            }
        }
    }
}
```

JDK 8+

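For JDK 8, I used the OkHttp library instead. (The code below reads the data: lines itself and never uses okhttp-sse's EventSource API, so the second dependency is optional for this demo.)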

```xml
<dependency>
    <groupId>com.squareup.okhttp3</groupId>
    <artifactId>okhttp</artifactId>
    <version>5.0.0-alpha.14</version>
</dependency>
<dependency>
    <groupId>com.squareup.okhttp3</groupId>
    <artifactId>okhttp-sse</artifactId>
    <version>5.0.0-alpha.14</version>
</dependency>
```

```java
import com.fasterxml.jackson.databind.JsonNode;
import com.fasterxml.jackson.databind.ObjectMapper;
import com.openaidemo.model.ChatRequest;
import com.openaidemo.model.Message;
import okhttp3.MediaType;
import okhttp3.OkHttpClient;
import okhttp3.Request;
import okhttp3.RequestBody;
import okhttp3.Response;
import okhttp3.ResponseBody;

import java.io.BufferedReader;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Scanner;
import java.util.concurrent.TimeUnit;

public class OpenAiDemo {

    // API key, replace with your actual key
    private static final String API_KEY = "";

    // Base URL of the API
    private static final String API_BASE = "";

    // ObjectMapper for JSON handling
    private static final ObjectMapper objectMapper = new ObjectMapper();

    // OkHttpClient
    private static final OkHttpClient okHttpClient = new OkHttpClient.Builder()
            .connectTimeout(120, TimeUnit.SECONDS) // connect timeout
            .readTimeout(120, TimeUnit.SECONDS)    // read timeout
            .writeTimeout(120, TimeUnit.SECONDS)   // write timeout
            .callTimeout(120, TimeUnit.SECONDS)    // timeout for the whole call (DNS, connect, writing the body, server processing, reading the response)
            .build();

    // Chat with GPT
    private static String chatWithGPT(List<Message> messages, String model, int maxTokens, double temperature, boolean isStream) {
        // Accumulates the complete response
        StringBuilder fullResponse = new StringBuilder();
        try {
            // Build the request parameters
            String body = createRequestBody(messages, model, maxTokens, temperature, isStream);

            // Build the request body with a JSON content type
            RequestBody requestBody = RequestBody.create(
                    body,
                    MediaType.get("application/json; charset=utf-8")
            );

            // Build the HTTP request: URL, headers and body
            Request request = new Request.Builder()
                    .url(API_BASE + "/chat/completions")
                    .addHeader("Content-Type", "application/json")
                    .addHeader("Authorization", "Bearer " + API_KEY)
                    .post(requestBody)
                    .build();

            // Execute the request and get the response
            try (Response response = okHttpClient.newCall(request).execute()) {
                // Check whether the response was successful
                if (!response.isSuccessful()) {
                    System.out.println("HTTP error: " + response.code());
                    return null;
                }

                // Get the response body
                ResponseBody responseBody = response.body();

                if (isStream) {
                    // Streaming: read line by line, decoding explicitly as UTF-8
                    // (without a charset, JDK 8 would use the platform default and could garble Chinese text)
                    try (BufferedReader reader = new BufferedReader(
                            new InputStreamReader(responseBody.byteStream(), StandardCharsets.UTF_8))) {
                        String line;
                        while ((line = reader.readLine()) != null) {
                            if (line.startsWith("data: ")) {
                                String jsonLine = line.substring(6).trim();
                                // End-of-stream marker
                                if ("[DONE]".equals(jsonLine)) {
                                    break;
                                }
                                try {
                                    // Parse the JSON chunk
                                    JsonNode jsonNode = objectMapper.readTree(jsonLine);
                                    String content = jsonNode.at("/choices/0/delta/content").asText();
                                    if (!content.isEmpty()) {
                                        fullResponse.append(content);
                                        System.out.print(content);
                                        System.out.flush();
                                    }
                                } catch (Exception e) {
                                    System.out.println("\nError while parsing the response: " + e.getMessage());
                                }
                            }
                        }
                    }
                } else {
                    // Non-streaming
                    String responseString = responseBody.string();
                    JsonNode jsonNode = objectMapper.readTree(responseString);
                    String content = jsonNode.at("/choices/0/message/content").asText();
                    fullResponse.append(content);
                    System.out.print(content);
                }
            }
        } catch (Exception e) {
            // On any exception, print the error
            System.out.println("Error: " + e);
            return null;
        }

        // Return the complete response string
        return fullResponse.toString();
    }

    // Build the JSON request body
    private static String createRequestBody(List<Message> messages, String model, int maxTokens, double temperature, boolean isStream) {
        try {
            ChatRequest request = new ChatRequest(model, messages, isStream, maxTokens, temperature);
            return objectMapper.writeValueAsString(request);
        } catch (Exception e) {
            throw new RuntimeException("Failed to create the request body", e);
        }
    }

    public static void main(String[] args) {
        System.out.print("Chatting with GPT (streaming mode), type 'exit' to quit");
        // Initialise the message list
        List<Message> messages = new ArrayList<Message>();
        // Add the system message
        messages.add(new Message("system", "You are a helpful assistant."));
        // try-with-resources closes the Scanner automatically
        try (Scanner scanner = new Scanner(System.in)) {
            // Conversation loop
            while (true) {
                // Prompt for input
                System.out.print("\nYou: ");
                // Read the user's input
                String userInput = scanner.nextLine();
                // Quit on "exit"
                if ("exit".equalsIgnoreCase(userInput)) {
                    break;
                }
                // Add the user's message to the list
                messages.add(new Message("user", userInput));
                // Print the GPT prefix
                System.out.print("GPT: ");
                // true = streaming, false = wait for the whole reply
                boolean userStream = true;
                // Call chatWithGPT to get GPT's reply
                String response = chatWithGPT(messages, "gpt-4o-mini", 3000, 0.7, userStream);
                if (null != response && !response.isEmpty()) {
                    messages.add(new Message("assistant", response));
                } else {
                    // Empty reply: print an error
                    System.out.println("Could not get a reply from GPT, please check your network connection or API key.");
                }
            }
        }
    }
}
```

Entity classes used

```java
package com.openaidemo.model;

import lombok.AllArgsConstructor;
import lombok.Data;

import java.util.List;

@Data
@AllArgsConstructor
public class ChatRequest {
    // Name of the model to use
    private String model;
    // List of conversation messages
    private List<Message> messages;
    // Whether to stream the response
    private boolean stream;
    // Maximum number of tokens; the snake_case name is serialized as the JSON key "max_tokens"
    private int max_tokens;
    // Temperature, controls the randomness of the output
    private double temperature;
}
```

```java
package com.openaidemo.model;

import lombok.AllArgsConstructor;
import lombok.Data;

@Data
@AllArgsConstructor
public class Message {
    // Message role (e.g. "system", "user", "assistant")
    private String role;
    // Message content
    private String content;
}
```

While using JDK 17 I came across a new feature, records, which I quite like, but out of habit I still created entity classes to map the data.

```java
// Use a record (JDK 16+ feature) for the request object
// record ChatRequest(String model, List<Message> messages, boolean stream, int max_tokens,
//                    double temperature) {
// }
```
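For comparison, the closest Python counterpart of a record is a frozen dataclass: an immutable value type whose field names become the JSON keys. Below is a sketch of the same request body built that way. The classes mirror ChatRequest and Message above, and the message values are illustrative. Because the field is named max_tokens in snake_case, it goes straight through as the JSON key the API expects. That is presumably also why the Java class names its field max_tokens.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass(frozen=True)
class Message:
    role: str     # "system", "user" or "assistant"
    content: str  # message text


@dataclass(frozen=True)
class ChatRequest:
    model: str
    messages: List[Message]
    stream: bool
    max_tokens: int  # snake_case, so the JSON key matches the API parameter
    temperature: float


req = ChatRequest(
    model="gpt-4o-mini",
    messages=[Message("system", "You are a helpful assistant."), Message("user", "你好")],
    stream=True,
    max_tokens=1000,
    temperature=0.7,
)
# asdict() recurses into the nested Message dataclasses inside the list
body = json.dumps(asdict(req), ensure_ascii=False)
print(body)
```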